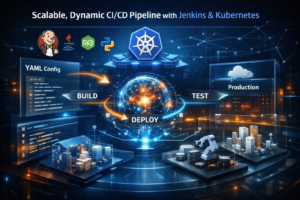

Building a Scalable, Dynamic Multi-Technology CI/CD Pipeline with Jenkins and Kubernetes

Modern engineering teams rarely work with a single technology stack. A typical organization might maintain Java microservices, Python APIs, Node.js backends, React frontends, and even infrastructure-as-code projects — all within the same ecosystem. Managing CI/CD pipelines for such diversity can quickly become complex, repetitive, and difficult to scale.

To address this challenge, we can design a scalable, dynamic CI/CD architecture using Jenkins and Kubernetes that supports multiple technologies through a unified and configurable approach.

The Problem with Traditional Pipelines

In many setups, each repository contains its own Jenkinsfile tailored to the specific technology it uses. While this works initially, it creates several long-term issues:

- Repeated pipeline logic across repositories
- Increased maintenance overhead
- Inconsistent build standards
- Dependency on DevOps engineers for minor changes
- Difficulty scaling across teams and projects

As the number of repositories grows, managing and updating pipelines becomes time-consuming and error-prone.

What’s needed is a system that centralizes pipeline logic while still allowing flexibility for different technology stacks.

A Dynamic and Centralized Approach

The core idea behind a scalable CI/CD system is simple:

Separate pipeline logic from project-specific configuration.

Instead of embedding complex pipeline code inside every repository, we can:

- Maintain shared pipeline logic in a centralized Jenkins shared library.
- Store only minimal configuration inside each application repository.
- Dynamically provision build environments using Kubernetes.

This approach significantly reduces duplication while improving scalability.

Centralized Pipeline Logic with Shared Libraries

Jenkins Shared Libraries allow you to define reusable pipeline components. Instead of writing pipeline steps repeatedly, you define them once and reuse them across projects.

The shared library can handle:

- Source code checkout
- Dependency installation
- Build execution
- Unit testing
- Docker image creation
- Artifact publishing
- Deployment steps

Each repository's Jenkinsfile then shrinks to a few lines that load the library and call its entry point, so the pipeline code itself is not copied between repositories.
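The stage list above can be pictured as one ordered runner that lives in the library. The sketch below is a Python illustration of that idea under stated assumptions, not Jenkins code: STAGE_ORDER, run_shared_pipeline and the skip_stages configuration key are hypothetical names, and a real shared library would implement each stage as a Groovy step.

```python
from typing import Any, Callable, Dict, List

# Hypothetical stage names; in Jenkins these would be Groovy steps in the
# shared library, not Python functions.
STAGE_ORDER: List[str] = [
    "checkout",
    "install_dependencies",
    "build",
    "unit_test",
    "docker_image",
    "publish_artifacts",
    "deploy",
]


def run_shared_pipeline(
    config: Dict[str, Any],
    stages: Dict[str, Callable[[Dict[str, Any]], None]],
) -> List[str]:
    """Run each stage in the fixed library-defined order; return names run.

    The order lives here, in one place, so every repository gets the same
    sequence. A repository can only opt out of stages via its config.
    """
    disabled = set(config.get("skip_stages", []))
    executed: List[str] = []
    for name in STAGE_ORDER:
        if name in disabled:
            continue
        # A missing stage implementation raises KeyError, failing fast.
        stages[name](config)
        executed.append(name)
    return executed
```

A repository would then contribute only its configuration (for example a skip_stages list containing "deploy"), while the stage implementations and their order stay in the library.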

Repository-Level Configuration Using YAML

Rather than writing Groovy pipeline scripts for every project, repositories can include a lightweight configuration file — for example, buildParams.yaml.

This file defines:

- Technology type (Java, Node.js, Python, etc.)
- Build commands
- Test commands
- Docker image details
- Resource requirements
- Deployment preferences

The shared pipeline reads this configuration file and executes the correct steps dynamically.

This makes onboarding a new service much simpler:

Developers only need to define configuration parameters — not pipeline logic.
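As a sketch of how the shared pipeline might validate this file, the Python below assumes buildParams.yaml has already been parsed into a dictionary (inside Jenkins, the readYaml step from the Pipeline Utility Steps plugin can do this). The field names (technology, build, test, docker.image, resources, deploy), the supported-technology set and the default CPU and memory values are all hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Dict, Optional

# Hypothetical schema values; adjust to whatever the shared library supports.
SUPPORTED_TECHNOLOGIES = {"java", "nodejs", "python", "docker", "frontend"}
DEFAULT_CPU = "500m"
DEFAULT_MEMORY = "1Gi"


@dataclass(frozen=True)
class BuildParams:
    """Normalized view of a repository's buildParams.yaml (hypothetical schema)."""

    technology: str
    build_command: Optional[str] = None
    test_command: Optional[str] = None
    image_name: Optional[str] = None
    cpu: str = DEFAULT_CPU
    memory: str = DEFAULT_MEMORY
    deploy: bool = False

    @classmethod
    def from_dict(cls, raw: Dict[str, Any]) -> "BuildParams":
        """Validate a parsed config dict and fill defaults for omitted fields."""
        technology = str(raw.get("technology", "")).strip().lower()
        if technology not in SUPPORTED_TECHNOLOGIES:
            raise ValueError(f"unsupported technology: {technology!r}")
        resources = raw.get("resources") or {}
        docker = raw.get("docker") or {}
        return cls(
            technology=technology,
            build_command=raw.get("build"),
            test_command=raw.get("test"),
            image_name=docker.get("image"),
            cpu=str(resources.get("cpu", DEFAULT_CPU)),
            memory=str(resources.get("memory", DEFAULT_MEMORY)),
            deploy=bool(raw.get("deploy", False)),
        )
```

A config with technology set to Java, build set to mvn -B package and a 2Gi memory request would come back as a BuildParams with technology "java", memory "2Gi" and the default CPU request. Rejecting unknown technologies at this point gives developers a clear error before any pod is requested.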

Dynamic Agent Provisioning with Kubernetes

One of the most powerful parts of this architecture is integrating Jenkins with Kubernetes.

Instead of using static Jenkins agents, we use dynamic Kubernetes pods that are created on demand.

Here’s how it works:

- A pipeline starts.
- Jenkins requests a Kubernetes pod.
- The pod is provisioned with the required container image (based on technology).
- The build runs inside that pod.
- Once the job completes, the pod is destroyed.

This approach provides:

- Automatic scalability
- Isolation between builds
- Efficient resource utilization
- Reduced infrastructure cost

Each build environment is clean, temporary, and tailored to the specific project.
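To make the per-technology pod concrete, here is a sketch that builds a Kubernetes Pod manifest as a Python dictionary. The BUILD_IMAGES mapping, the image tags, the ci-technology label and the resource defaults are assumptions for illustration; a shared library would typically serialize an equivalent manifest to YAML for the Jenkins Kubernetes plugin, which normally adds its own agent (jnlp) container alongside the build container.

```python
from typing import Any, Dict

# Hypothetical technology-to-image mapping; the tags are examples of public
# Docker Hub images, not versions the architecture prescribes.
BUILD_IMAGES: Dict[str, str] = {
    "java": "maven:3.9-eclipse-temurin-17",
    "nodejs": "node:20",
    "python": "python:3.12",
}


def build_pod_manifest(
    technology: str, cpu: str = "500m", memory: str = "1Gi"
) -> Dict[str, Any]:
    """Return a Pod manifest (as a dict) with one build container for the stack."""
    try:
        image = BUILD_IMAGES[technology]
    except KeyError:
        raise ValueError(f"no build image configured for {technology!r}") from None
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"labels": {"ci-technology": technology}},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": "build",
                    "image": image,
                    # Keep the container idle so pipeline steps can run inside it,
                    # the usual pattern in Kubernetes plugin pod templates.
                    "command": ["cat"],
                    "tty": True,
                    "resources": {
                        "requests": {"cpu": cpu, "memory": memory},
                        # Memory limit matches the request; CPU is left
                        # unlimited here as a simplifying choice.
                        "limits": {"memory": memory},
                    },
                }
            ],
        },
    }
```

Because the image and resource requests are derived from the project's configuration, two projects on different stacks get different pods from the same code path, and nothing from one build survives into the next.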

Multi-Technology Support

Since each project specifies its own configuration, the same pipeline can support multiple stacks:

- Java builds using Maven or Gradle
- Node.js builds using npm or yarn
- Python builds using pip or poetry
- Docker-based services
- Frontend applications

The shared pipeline reads the technology type from the configuration file and selects the matching container image for the Kubernetes build pod.

This eliminates the need to maintain separate pipelines per technology.
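The tool choices listed above (Maven or Gradle, npm or yarn, pip or poetry) suggest a lookup keyed by technology and build tool, with explicit configuration taking precedence. The sketch below assumes that design; DEFAULT_COMMANDS and resolve_commands are hypothetical, and the default commands are common invocations of each tool rather than values the architecture prescribes (the yarn line uses Yarn 1 syntax, and the npm line assumes the project defines a build script).

```python
from typing import Dict, Optional, Tuple

# Hypothetical defaults per (technology, build tool): (build, test) commands.
DEFAULT_COMMANDS: Dict[Tuple[str, str], Tuple[str, str]] = {
    ("java", "maven"): ("mvn -B package -DskipTests", "mvn -B test"),
    ("java", "gradle"): ("./gradlew assemble", "./gradlew test"),
    ("nodejs", "npm"): ("npm ci && npm run build", "npm test"),
    ("nodejs", "yarn"): ("yarn install --frozen-lockfile && yarn build", "yarn test"),
    ("python", "pip"): ("pip install -r requirements.txt", "python -m pytest"),
    ("python", "poetry"): ("poetry install", "poetry run pytest"),
}


def resolve_commands(
    technology: str,
    tool: str,
    build_override: Optional[str] = None,
    test_override: Optional[str] = None,
) -> Tuple[str, str]:
    """Pick build/test commands: explicit config wins, else the stack default."""
    defaults = DEFAULT_COMMANDS.get((technology, tool))
    if defaults is None and (build_override is None or test_override is None):
        raise ValueError(
            f"no defaults for {technology}/{tool}; set build and test explicitly"
        )
    build = build_override if build_override is not None else defaults[0]
    test = test_override if test_override is not None else defaults[1]
    return build, test
```

Docker-based services and frontend applications that do not match a built-in default can still run through the same pipeline by declaring their build and test commands explicitly.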

Benefits of This Architecture

1. Scalability

New repositories can plug into the CI/CD system with minimal setup.

2. Maintainability

Pipeline updates happen in one place — the shared library.

3. Developer Independence

Teams don’t need to understand Jenkins Groovy syntax. They simply define configuration parameters.

4. Resource Efficiency

Build pods consume cluster resources only while a build is running, so idle capacity is not tied up in permanent agents.

5. Consistency

All projects follow standardized CI/CD practices.

Self-Service CI/CD

This architecture enables a self-service DevOps model.

Developers:

- Push code
- Update configuration
- Trigger builds

The pipeline automatically handles the rest — building, testing, containerizing, and deploying — without requiring DevOps intervention for each change.

This dramatically reduces bottlenecks and accelerates delivery.

High-Level Workflow

- Developer pushes code to repository.
- Jenkins pipeline is triggered.
- Shared library reads project configuration.
- Kubernetes dynamically provisions a build pod.
- Build and test steps execute.
- Docker image is created and pushed.
- Deployment process begins (if configured).
- Pod is terminated after completion.

Everything happens dynamically and automatically.
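The workflow above can be simulated end to end to show the property that matters most for cleanup: the build pod is torn down whether the build succeeds or fails. This is a hypothetical Python model, not how Jenkins is implemented (the Kubernetes plugin deletes agent pods itself); ephemeral_pod, run_workflow and the config keys are illustrative names, and run stands in for executing a command inside the pod.

```python
from contextlib import contextmanager
from typing import Any, Callable, Dict, Iterator, List


@contextmanager
def ephemeral_pod(name: str, events: List[str]) -> Iterator[str]:
    """Simulate pod provisioning; the pod is always torn down afterwards."""
    events.append(f"provision {name}")
    try:
        yield name
    finally:
        # Runs on success and on failure, mirroring "pod is terminated after completion".
        events.append(f"terminate {name}")


def run_workflow(
    config: Dict[str, Any], run: Callable[[str], None], events: List[str]
) -> None:
    """Walk the high-level workflow, recording each step in `events`."""
    events.append("pipeline triggered")
    events.append(f"config read for {config['service']}")
    with ephemeral_pod(f"{config['service']}-build", events):
        run(config["build"])
        events.append("build done")
        run(config["test"])
        events.append("tests passed")
        image = config["image"]
        run(f"docker build -t {image} .")
        run(f"docker push {image}")
        events.append(f"image pushed: {image}")
        # Deployment is optional, matching "(if configured)" in the workflow.
        if config.get("deploy", False):
            events.append("deployment started")
```

Passing a run function that raises on a failing test command still leaves the pod's "terminate" entry as the last recorded event, and the image push and deployment steps are skipped.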

Final Thoughts

As organizations scale, CI/CD systems must evolve from rigid, technology-specific pipelines to flexible, centralized, and dynamic architectures.

By combining:

- Jenkins Shared Libraries
- Repository-driven configuration
- Kubernetes dynamic agents

You can create a powerful, multi-technology CI/CD framework that is scalable, maintainable, and future-ready.

This approach not only simplifies DevOps operations but also empowers engineering teams to move faster with confidence.